Enterprise versus Client SSD

A growing number of data centers that require high data throughput and low transaction latency, and that previously relied on hard disk drives (HDDs) in their servers, are now running into performance bottlenecks. Many are turning to solid-state drives (SSDs) to increase data center performance, efficiency and reliability, and to lower overall operating expenses (OpEx).

To understand the differences between SSD classes, it helps to separate the two key components of an SSD: the flash storage controller (or simply the SSD controller) and the non-volatile NAND flash memory that stores the data.

In today’s market, consumption of SSDs and NAND flash memory falls into three main groups:

- Consumer devices (tablets, cameras, mobile phones);

- Client systems (netbooks, notebooks, Ultrabooks, all-in-one (AIO) and desktop personal computers) and embedded/industrial systems (gaming kiosks, purpose-built systems, digital signage);

- Enterprise computing platforms (HPC, data center servers).

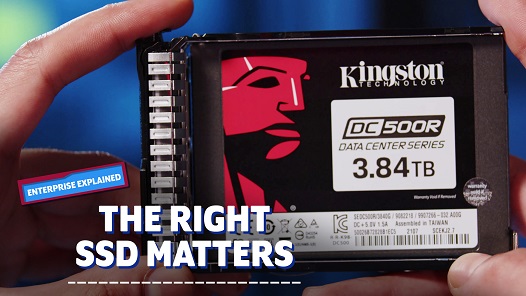

Choosing the right SSD for an enterprise data center can be a long and arduous process of evaluating and qualifying many SSD vendors and product types, because not all SSDs, or the NAND flash memory inside them, are created equal.

SSDs are designed to be easily deployed as a replacement for, or a complement to, HDDs. They are available in several form factors, including 2.5", and with several communication protocols and interfaces for transferring data to and from the server's central processing unit (CPU): Serial ATA (SATA), Serial Attached SCSI (SAS) and, more recently, NVMe over PCIe.

Ease of deployment, however, does not guarantee that an SSD will remain suitable over the long term for the enterprise application it was selected for. Choosing the wrong SSD can negate any initial cost savings and performance benefits if the drives wear out prematurely from excessive writes, deliver far lower sustained write performance over their expected lifetime, or add latency to the storage array, any of which can force early field replacement.
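One way to see how a write-heavy workload can wear out a drive early is to compare the drive's rated write endurance with the workload's daily write volume. The sketch below is a minimal illustration under stated assumptions: it assumes the endurance rating is given in drive writes per day (DWPD) over the warranty period, converts that to total terabytes written (TBW), and divides by a steady daily write volume. The helper names and every capacity, rating and workload figure are hypothetical, not taken from any specific product, and the model ignores effects such as workload-dependent write amplification and leap years.

```python
def rated_tbw(capacity_tb: float, dwpd: float, warranty_years: float) -> float:
    """Convert a drive-writes-per-day rating into total terabytes written (TBW)."""
    # One "drive write" = writing the full capacity once; assumes 365-day years.
    return capacity_tb * dwpd * 365 * warranty_years


def years_until_worn_out(tbw: float, host_writes_tb_per_day: float) -> float:
    """Years until the rated endurance is used up at a steady daily write load."""
    if host_writes_tb_per_day <= 0:
        raise ValueError("daily write volume must be positive")
    return tbw / (host_writes_tb_per_day * 365)


# Hypothetical drives of equal capacity with different endurance ratings.
low_endurance_tbw = rated_tbw(capacity_tb=1.92, dwpd=0.3, warranty_years=5)
high_endurance_tbw = rated_tbw(capacity_tb=1.92, dwpd=3.0, warranty_years=5)

daily_writes_tb = 3.0  # assumed server write workload, in TB per day

if __name__ == "__main__":
    for label, tbw in (("low-endurance", low_endurance_tbw),
                       ("high-endurance", high_endurance_tbw)):
        years = years_until_worn_out(tbw, daily_writes_tb)
        print(f"{label}: {tbw:.1f} TBW, about {years:.2f} years at {daily_writes_tb} TB/day")
```

With these assumed numbers, the lower-endurance drive would use up its rated endurance in under a year, well inside a five-year warranty, while the higher-endurance drive would not be limited by writes within that period.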

We will discuss the three main qualities that distinguish enterprise-class SSDs from client-class SSDs, to help you make the right purchasing decision when it is time to replace or add storage in a server.